1. I am using CloudPanel with nginx, and when I activate Varnish, my pages disappear from the cache: https://prnt.sc/_oe2s7oLVC9-

2. When I apply the settings recommended by the plugin (https://prnt.sc/rLQrzPkZvmZB), robots.txt and the sitemap stop working: https://prnt.sc/Q9HJTPn2e6iu

/calendar-page/?id=1113957581&ajaxCalendar=1&mo=6&yr=2024

The issue is not that it’s crawling through all the possible months and years (although that could be an issue too, if there’s no cap on how far it could get into the past or future); rather, the problem is the id= value which appears to be random. The crawlers end up with many copies of the same page, indexed with different values of id.

I noticed this simply because it was putting an unusual amount of load on my servers, but it’s also not ideal behavior for the widget. Why is there an id value specified at all? Getting rid of that, if possible, would help a lot. Maybe these links should have rel=nofollow too, so the crawlers won’t bother with them in the first place. Of course, one wouldn’t want to block a crawler’s ability to find a legitimate calendar entry, but surely there’s a better way than to have it scan through every month/year view, regardless. In my case, Googlebot can find all my calendar entries via my sitemap.xml, which is much more efficient.
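To illustrate the duplication, here is a minimal Python sketch showing that two links differing only in `id` collapse to the same URL once that parameter is dropped. The second URL and the `strip_param` helper are hypothetical, made up for illustration; this is not how the widget itself builds its links.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def strip_param(url, name="id"):
    """Return the URL with every occurrence of one query parameter removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != name
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


urls = [
    # The link from the post above.
    "/calendar-page/?id=1113957581&ajaxCalendar=1&mo=6&yr=2024",
    # Hypothetical second link: same month and year, different id value.
    "/calendar-page/?id=42&ajaxCalendar=1&mo=6&yr=2024",
]

# Both links reduce to a single URL once id is ignored.
unique = {strip_param(u) for u in urls}
```

Without `id`, a crawler would see one URL per month/year view instead of an unbounded number of copies.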

In the meantime, I added something like Disallow: /calendar-page/?*ajaxCalendar* to my robots.txt which should help. But I’m curious to see if anyone else has a better solution.
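For anyone who wants to sanity-check a rule like this before deploying it, here is a rough Python sketch of the wildcard matching that the major crawlers document (`*` matches any run of characters, a trailing `$` anchors the end, and everything else, including `?`, is literal). The `rule_matches` helper is my own hand-rolled assumption about that behaviour, not any crawler's actual code, so treat the result as a hint only.

```python
import re


def rule_matches(pattern, path):
    """Check a robots.txt path pattern against a URL path plus query string."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece and join the pieces with ".*" for the wildcards.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start, which gives the usual prefix semantics.
    return re.match(regex, path) is not None


rule = "/calendar-page/?*ajaxCalendar*"

blocked = rule_matches(rule, "/calendar-page/?id=1113957581&ajaxCalendar=1&mo=6&yr=2024")
plain_page = rule_matches(rule, "/calendar-page/")
```

Under these assumptions the AJAX month view is blocked while the plain `/calendar-page/` URL stays crawlable.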

Isn’t there a filter so that .PHP is ignored, or so that only URLs with more than 2 or 3 words are listed?

Something that seems more legitimate than those damn robots.

Recently we noticed that one of our websites had “Discourage search engines from indexing the page” enabled, so we turned the option off.

What we expected to happen is that all pages would now have the index,follow robots directive.

What actually happened is that only the front page was updated; the rest of the pages were still served from cache with noindex.

I think this behaviour should be changed so that changing this setting clears the cache for all pages.

Plugin version: 6.0.0.1

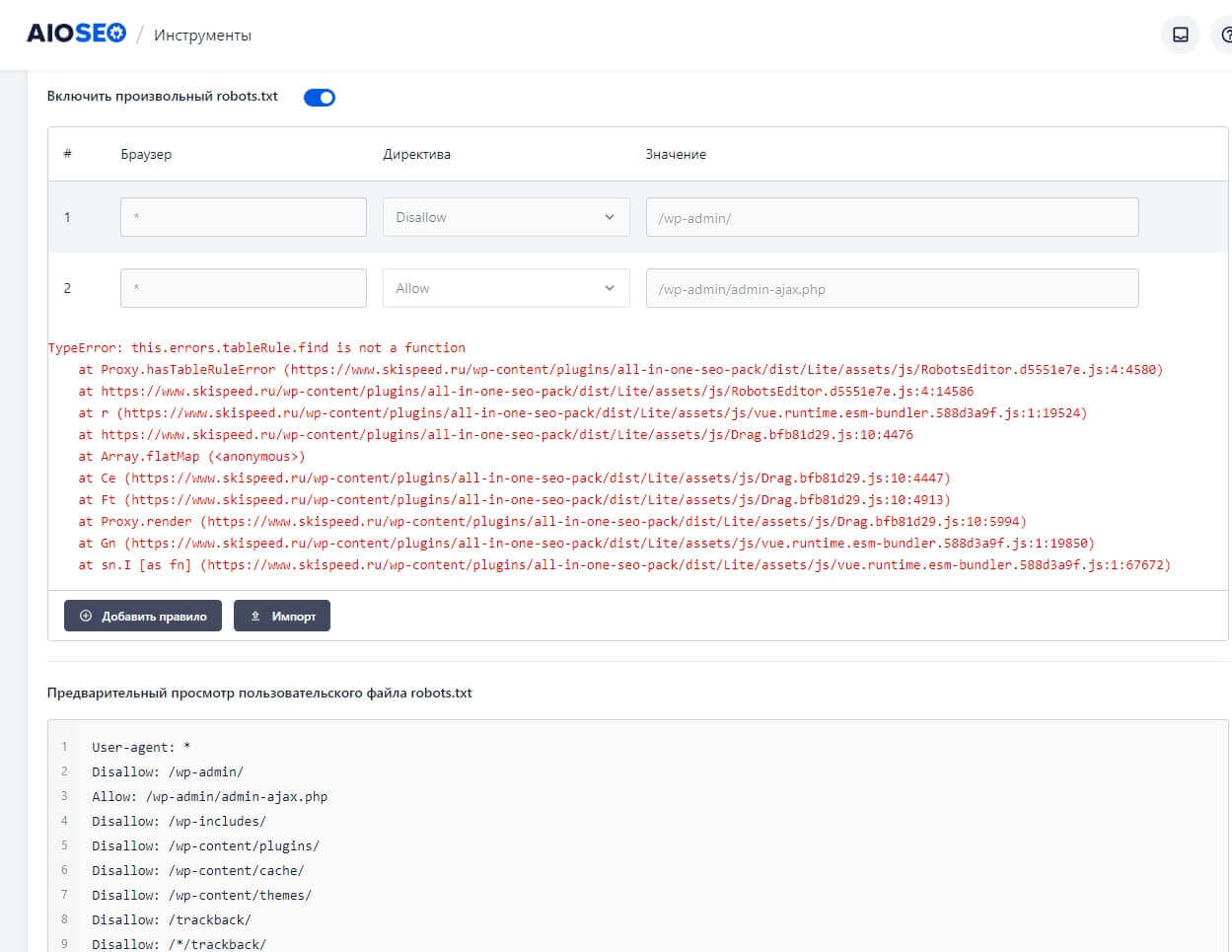

Please help me resolve the error shown in the robots.txt section of the “Tools” tab of the All in One SEO plugin (free version).

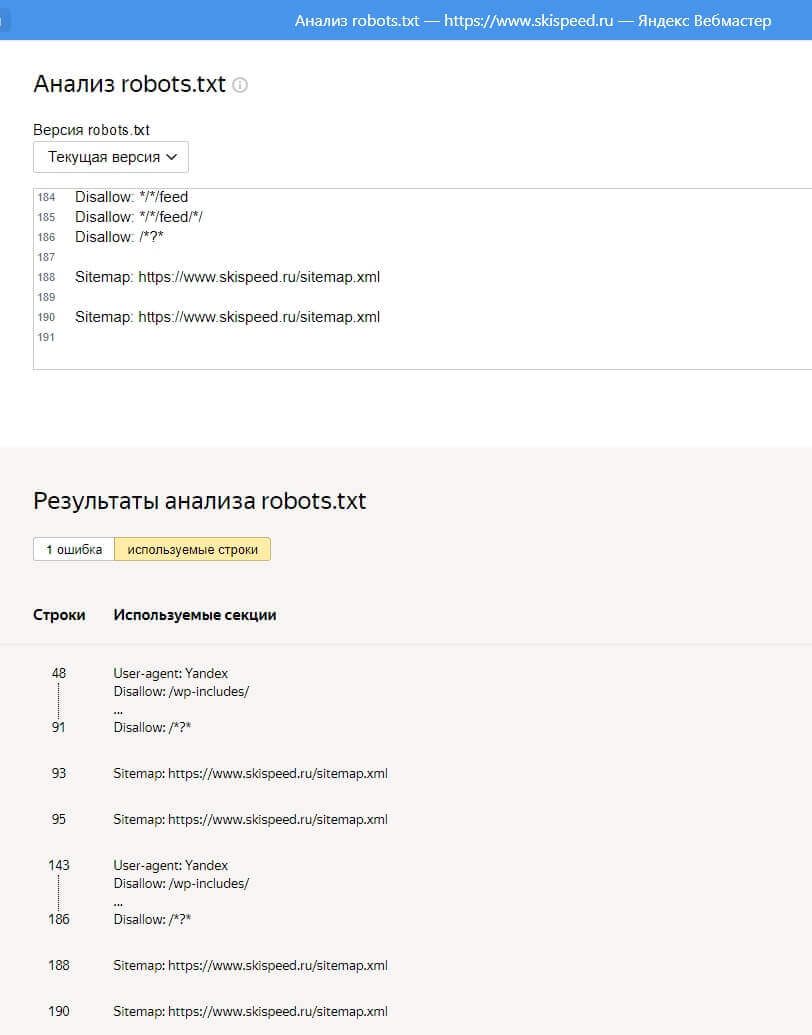

On top of that, Yandex Webmaster also reports an error!

And for some reason its file has 190 lines, while the file in the All in One SEO plugin has 93 lines.

This is set in the header of each user page:

<meta name='robots' content='noindex, follow' />

How can I disable that (other than removing the plugin)?

I want author pages to be indexed.
